Project Findings

The complete set of findings can be found in the project report, available here. The most important findings are summarised below.

Coverage and level

An important finding is that many tasks address a given learning outcome only in part, for one or more of the following reasons:

- A task may address only some sub-points of a stated learning outcome (e.g. CTLO 1.1, but not 1.2)

- The task may address a learning outcome at an insufficient depth, i.e. below the threshold level expectation for a graduate

- The task may address a learning outcome at an insufficient breadth to satisfy the full graduate level expectation (for example, no single task could address the full range of chemistry content knowledge expected to be covered over a whole degree)

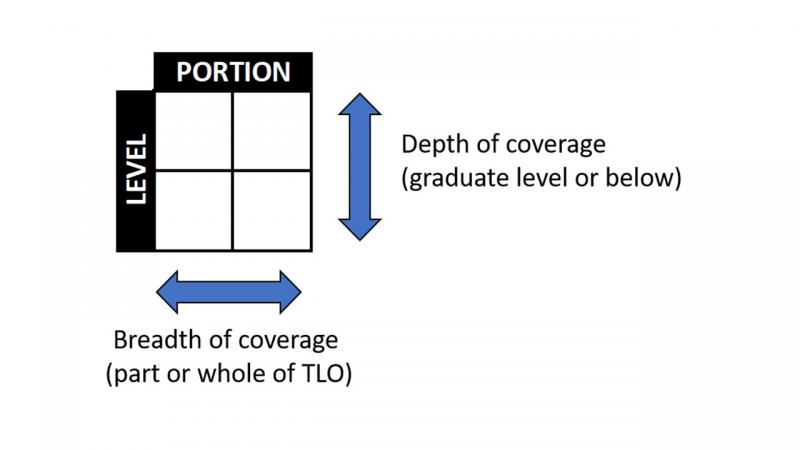

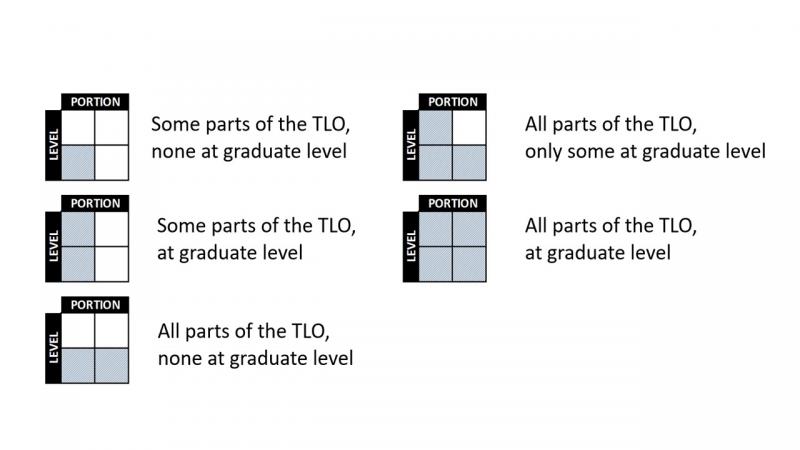

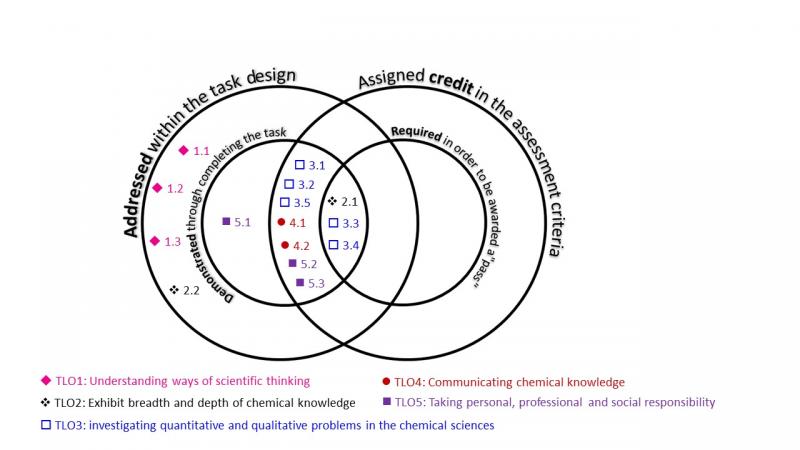

To resolve these issues, the response format was changed so that partial CTLO coverage could be recorded for reasons of depth, breadth, or both, each judged independently. This was achieved by using a “four-square” response format as follows:

This leads to the following set of outcomes when measuring a task against a specific learning outcome:

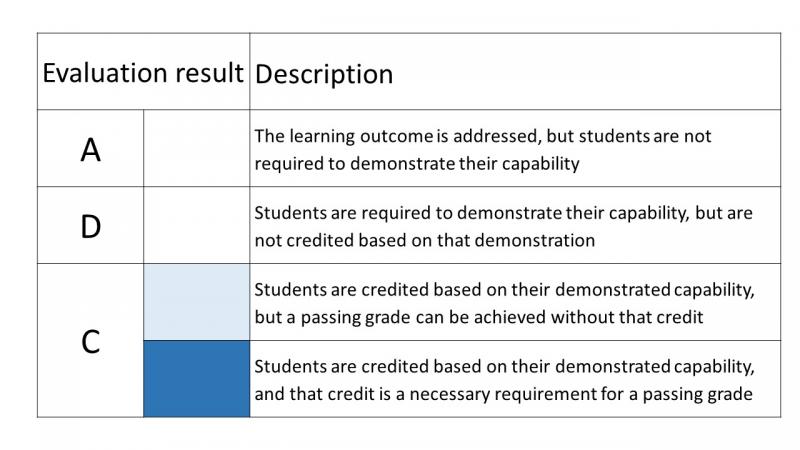
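As an illustration only, the four-square format can be read as two independent yes/no judgements, one for depth and one for breadth, giving four possible outcomes for a task measured against a learning outcome. The sketch below is a minimal Python rendering under that assumption; the names `Coverage` and `four_square` and the outcome labels are hypothetical and are not taken from the project's evaluation tool.

```python
from enum import Enum


class Coverage(Enum):
    """Hypothetical labels for the four outcomes of a depth x breadth judgement."""
    FULL = "sufficient depth and sufficient breadth"
    DEPTH_ONLY = "sufficient depth, insufficient breadth"
    BREADTH_ONLY = "sufficient breadth, insufficient depth"
    NEITHER = "insufficient depth and insufficient breadth"


def four_square(depth_ok: bool, breadth_ok: bool) -> Coverage:
    """Combine two independent judgements into one of four outcomes.

    depth_ok:   the task meets the threshold depth expected of a graduate
    breadth_ok: the task covers the full breadth of the learning outcome
    """
    if depth_ok and breadth_ok:
        return Coverage.FULL
    if depth_ok:
        return Coverage.DEPTH_ONLY
    if breadth_ok:
        return Coverage.BREADTH_ONLY
    return Coverage.NEITHER


# Example: a task that reaches graduate-level depth on only some sub-points.
outcome = four_square(depth_ok=True, breadth_ok=False)
print(outcome.value)
```

Keeping the two judgements separate means a task is never simply marked "partial"; the record also shows whether depth, breadth, or both fell short.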

In addition to evaluating the coverage of the TLO by the task when credit is given to students for demonstrating the TLO, the tasks were ranked using the following set of criteria.

This gives a fine-grained evaluation of tasks.

Coverage of CTLOs by submitted assessment tasks

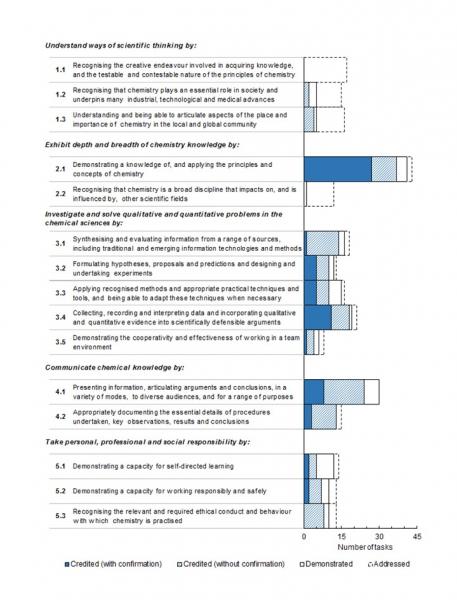

The following figure summarises the consensus of the project team on CTLO coverage within the tasks submitted to the project.

It can be seen that, among the 45 submitted tasks, all of the CTLOs are addressed or demonstrated, and most are credited, in at least some tasks. However, fewer tasks were found to include confirmation of attainment of the CTLO (dark blue or pale blue bars). This is because aggregated marks can often carry a student over the “pass” line without confirming any single CTLO specifically. As would be expected, coverage of CTLO 2.1 (“body of knowledge”) was widespread.

These data are summarised as a bullseye chart in the following diagram.

Importantly, for all three parts of CTLO 1 as well as for CTLOs 2.2 and 5.3, no tasks were submitted which confirm student attainment. In fact, for CTLOs 1.1 and 2.2, no tasks were submitted where credit is given. The project team did find more than ten tasks that address each part of CTLO 1 and CTLO 2.2, but in very few of these tasks were students given the opportunity to demonstrate the CTLO or given credit for doing so. Exemplars for these TLOs can be found here.

Inaccurate task evaluations

Task submitters often claimed that particular CTLOs were assessed in their tasks when, in reality, students had no opportunity to demonstrate them in their work. Instead, the task often served only as a learning experience relevant to the CTLO, not as an evaluation of student capability. Consequently, a task was often claimed to have ‘ticked the box’ for assessing a particular CTLO simply because it gave students an opportunity to engage with that CTLO, not because it assessed student capability. This is unsatisfactory under the new paradigm, in which attainment of learning outcomes must be confirmed. A key factor shaping the task evaluation procedure was the recognition that two very different interpretations of “assessing” a CTLO exist and were frequently conflated:

1. Providing students with a task to complete which relates to the CTLO, vs.

2. Making a judgement of student capability with respect to the CTLO.

Historically, assessment practices have allowed the first to be fulfilled in the absence of the second. Tasks which serve as learning experiences will inherently provide students with the opportunity to develop skills (provide the relevant inputs), but may not necessarily demand any specific demonstration of CTLO attainment (require sufficient outputs). Only the second interpretation fulfils the new HESF requirement that methods of assessment must be capable of confirming CTLO attainment.

A related issue was a considerable lack of detail in the marking guidelines of many tasks. As a consequence, evaluators could not be confident that particular CTLOs were required of students in those cases. The importance of clear, detailed marking guidelines is emphasised in the exemplars.

Difficulties with wording of some TLOs

Many of the CTLOs have multiple parts that require quite different tasks from students in order to be confirmed. This resulted in frequent "partial" coverage by tasks, which is not ideal because careful reading is then required to determine which part is missing. Some of the CTLOs may therefore need to be revisited for application.

Purpose of assessment

The project generated widespread awareness of, and deeper thinking about, the purpose of assessment, extending beyond the project team into the Chemistry academic community in Australia. This expanded appreciation of the importance and complexity of assessment was achieved in parallel with, and intertwined with, the process of developing and refining the evaluation tool, through professional development workshops and dissemination of project findings at conferences. The significance of Chemistry academics taking ownership of a new approach to embedding assessment cannot be emphasised too strongly.

Unless otherwise noted, content on this site is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License
